
STEP: A Scripting Language for Embodied Agents


STEP (Scripting Technology for Embodied Persona) is a scripting language for embodied agents, in particular for their communicative acts. Scripting languages are, to a certain extent, simplified languages that ease the task of computation and reasoning. One of the main advantages of using a scripting language is that the specification of communicative acts can be separated from the programs that specify the agent architecture and other mental-state reasoning. Thus, changing the specification of communicative acts does not require re-programming the agent.

STEP adopts a Prolog-like syntax, which makes it compatible with most standard logic programming languages, while its formal semantics is based on dynamic logic. STEP therefore has a solid semantic foundation, despite offering a rich set of compositional operators and facilities for interacting with the world.

Principle 1: Convenience

The specification of communicative acts, such as gestures and facial expressions, usually involves a lot of geometrical data, such as ROUTE statements in VRML or movement equations like those used in computer graphics.

A scripting language should hide these geometrical difficulties, so that non-professional authors can use it in a natural way. For example, suppose that an author wants to specify that the agent turns his left arm forward slowly. It can be specified like this:

It should not be necessary to specify it as follows, which requires knowledge of the coordinate system, rotation axes, etc.

Principle 2: Compositional Semantics

A scripting language should support the specification of composite actions based on existing components. For example, an action in which an agent turns both arms forward slowly can be defined in terms of two primitive actions, turn-left-arm and turn-right-arm, as follows:
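As a rough illustration of compositional semantics (a Python model, not STEP syntax), the sketch below represents primitive and composite actions as data and derives the duration of a composite from its parts. It assumes that durations are counted in beats, that sequential composition adds durations and parallel composition takes the longest one; the class names `Turn`, `Seq` and `Par` are hypothetical, and the joint names `l_shoulder`/`r_shoulder` are borrowed from H-anim.

```python
from dataclasses import dataclass
from typing import Union

# Hypothetical speed table in beats: 'fast' = 1 beat, 'slow' = 3 beats.
SPEED_BEATS = {"fast": 1, "slow": 3}


@dataclass(frozen=True)
class Turn:
    """Primitive action: turn one body part towards a direction."""
    agent: str
    body_part: str
    direction: str
    speed: str

    def beats(self) -> int:
        return SPEED_BEATS[self.speed]


@dataclass(frozen=True)
class Seq:
    """Sequential composition: the actions run one after another."""
    actions: tuple

    def beats(self) -> int:
        return sum(a.beats() for a in self.actions)


@dataclass(frozen=True)
class Par:
    """Parallel composition: the actions start together; the longest decides."""
    actions: tuple

    def beats(self) -> int:
        return max(a.beats() for a in self.actions)


Action = Union[Turn, Seq, Par]


def turn_arms_forward_slowly(agent: str) -> Action:
    # A composite action built only from two primitive turns, one per arm.
    return Par((
        Turn(agent, "l_shoulder", "front", "slow"),
        Turn(agent, "r_shoulder", "front", "slow"),
    ))
```

Because the composite is built only from its components, `turn_arms_forward_slowly("Agent").beats()` evaluates to 3 (both arms turn at the same time), while wrapping the same two turns in `Seq` gives 6.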

Principle 3: Re-definability

Scripting actions (i.e., composite actions) can be defined explicitly in terms of other defined actions. Namely, the scripting language should be a rule-based specification system. Scripting actions are defined with their own names, and these defined actions can be re-used in other scripting actions. For example, if we have defined two scripting actions run and kick, then a new action run_then_kick can be defined in terms of run and kick:

which can be specified in a Prolog-like syntax:
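Re-definability can be sketched in Python (again, not STEP syntax) as a table of named scripts that are expanded recursively into primitive actions. The primitive names `left_step`, `right_step` and `swing_right_leg` and the helpers `script` and `expand` are hypothetical; a name is treated as primitive when no script with that name is registered.

```python
from typing import Dict, List, Union

# An action body is either a name (str) or a sequence of bodies (list).
Body = Union[str, List["Body"]]

# Hypothetical table of named scripting actions.
SCRIPTS: Dict[str, Body] = {}


def script(name: str, body: Body) -> None:
    """Define or re-define a named scripting action."""
    SCRIPTS[name] = body


def expand(body: Body) -> List[str]:
    """Recursively unfold named scripts into a flat list of primitive actions."""
    if isinstance(body, list):  # a sequence: expand each element in order
        return [prim for part in body for prim in expand(part)]
    if body in SCRIPTS:  # a named script: expand its definition
        return expand(SCRIPTS[body])
    return [body]  # anything else is treated as a primitive action


script("run", ["left_step", "right_step"])
script("kick", ["swing_right_leg"])
# run_then_kick refers only to the names of other scripts.
script("run_then_kick", ["run", "kick"])
```

With these definitions, `expand("run_then_kick")` returns `['left_step', 'right_step', 'swing_right_leg']`. Re-defining `run` changes `run_then_kick` without touching its definition, which is the practical benefit of naming scripts. This toy expander does not detect recursive definitions.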

Principle 4: Parametrization

Scripting actions can be adapted into other actions. Namely, actions can be specified in terms of how they cause changes over time to each individual degree of freedom. For example, suppose that we define a scripting action 'run'. Running can be done at different paces: it can be a 'fast-run' or a 'slow-run'. We should not have to define a separate run action for every pace. Instead, we can define the action 'run' with respect to a degree of freedom 'tempo'; changing the tempo of a generic run action should then be enough to obtain run actions at different paces. Another method of parametrization is to introduce variables or parameters in the names of scripting actions, so that a single definition yields similar actions for different parameter values. This is one of the reasons why we introduce a Prolog-like syntax in STEP.
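The two kinds of parametrization described above can be sketched in Python as a single generic run whose pace comes from a tempo and whose length comes from a parameter. This is a hypothetical model, not STEP's definition of run: it assumes one step per beat, alternating the H-anim hip joints `l_hip` and `r_hip`, and that one beat lasts 60/tempo seconds (tempo in beats per minute).

```python
from typing import List, Tuple

Step = Tuple[str, str, float]  # (agent, joint, duration in seconds)


def beat_seconds(tempo: float) -> float:
    """Length of one beat in seconds; tempo is given in beats per minute."""
    return 60.0 / tempo


def run(agent: str, steps: int, tempo: float = 60.0) -> List[Step]:
    """Hypothetical generic 'run': one step per beat, alternating hips.

    The pace is controlled by `tempo` and the length by `steps`, so no
    separate 'fast-run' or 'slow-run' definition is needed.
    """
    hips = ["l_hip", "r_hip"]
    return [(agent, hips[i % 2], beat_seconds(tempo)) for i in range(steps)]


def total_seconds(action: List[Step]) -> float:
    """Total duration of a run, summing the duration of every step."""
    return sum(duration for _, _, duration in action)
```

`total_seconds(run("Agent", 4, tempo=60))` is 4.0 and `total_seconds(run("Agent", 4, tempo=120))` is 2.0: doubling the tempo halves the duration without a separate 'fast-run' definition.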

Principle 5: Interaction

Scripting actions should be able to interact with, or more exactly perceive, the world, including embodied agents' mental states, in order to decide whether to continue the current action, switch to other actions, or stop the current action. This kind of interaction can be achieved by introducing high-level interaction operators, as defined in dynamic logic. The operators 'test' and 'conditional', for example, are useful for the interaction between actions and states.
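A minimal Python interpreter can illustrate how 'test' and 'conditional' let a script consult the state before acting. It is a sketch under stated assumptions, not STEP's implementation: the world and mental state is a plain dictionary of booleans, `Check` plays the role of the dynamic-logic test (a false condition aborts the rest of the script, since the test has no successor state), and `IfThenElse` picks a branch; all class and action names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union

State = Dict[str, bool]


@dataclass
class Do:
    name: str  # a primitive action; executing it just records its name


@dataclass
class Check:
    cond: Callable[[State], bool]  # the 'test' operator: go on only if cond holds


@dataclass
class IfThenElse:
    cond: Callable[[State], bool]  # the 'conditional' operator
    then: "Action"
    orelse: "Action"


@dataclass
class Seq:
    actions: List["Action"]


Action = Union[Do, Check, IfThenElse, Seq]


class CheckFailed(Exception):
    """Raised when a test finds its condition false."""


def execute(action: Action, state: State, trace: List[str]) -> None:
    if isinstance(action, Do):
        trace.append(action.name)
    elif isinstance(action, Check):
        if not action.cond(state):
            raise CheckFailed()
    elif isinstance(action, IfThenElse):
        branch = action.then if action.cond(state) else action.orelse
        execute(branch, state, trace)
    elif isinstance(action, Seq):
        for sub in action.actions:
            execute(sub, state, trace)
    else:
        raise TypeError(f"not an action: {action!r}")


def perform(action: Action, state: State) -> Tuple[List[str], bool]:
    """Run a script; return the executed primitives and whether it completed."""
    trace: List[str] = []
    try:
        execute(action, state, trace)
        return trace, True
    except CheckFailed:
        return trace, False


# Hypothetical scripts: walk to the ball, then kick if it is near, else wait.
approach = Seq([Do("walk_to_ball"),
                IfThenElse(lambda s: s["ball_near"], Do("kick"), Do("wait"))])
# Kick only after the test on the state succeeds.
guarded_kick = Seq([Check(lambda s: s["ball_near"]), Do("kick")])
```

With `{"ball_near": False}`, `perform(approach, ...)` still completes by choosing the 'wait' branch, whereas `perform(guarded_kick, ...)` stops at the test and executes nothing.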

Direction Reference

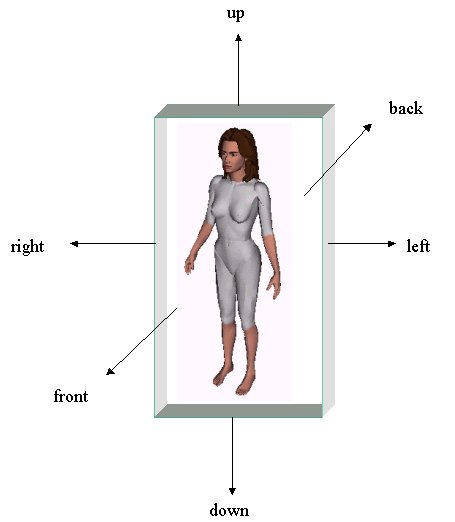

The reference system in STEP is based on the H-anim specification: the initial humanoid position should be modeled in a standing position, facing in the +Z direction, with +Y up and +X to the humanoid's left. The origin (0, 0, 0) is located at ground level, between the humanoid's feet. The arms should be straight and parallel to the sides of the body, with the palms of the hands facing inwards towards the thighs.

Based on the standard pose of the humanoid, we can define the direction reference system as sketched in the following figure.

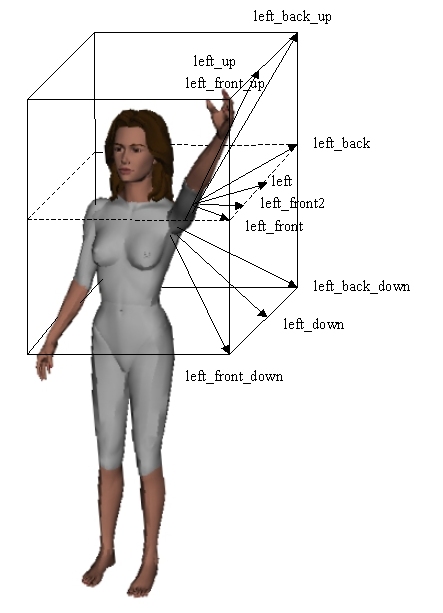

The direction reference system is based on three dimensions: front vs. back, which corresponds to the Z-axis; up vs. down, which corresponds to the Y-axis; and left vs. right, which corresponds to the X-axis. Based on these three dimensions, we can introduce a more natural-language-like direction reference scheme. For example, turning the left arm to 'front-up' means turning it so that the front end of the arm points in the upward-front direction. The following figure shows several combinations of directions based on these three dimensions for the left arm. The direction references for other body parts are similar.

These natural-language-like references are convenient for authors specifying scripting actions, since they do not require detailed knowledge of the VRML reference system. Moreover, STEP also supports the original VRML reference system, which is useful for experienced authors: a direction can be specified as a four-place tuple (X, Y, Z, R), where (X, Y, Z) is the rotation axis and R is the rotation angle in radians, e.g., rotation(1,0,0,1.57).
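The mapping from natural-language-like directions to VRML-style rotations can be sketched in Python. The sketch assumes that a combined name such as 'front_up' (or 'front-up') denotes the normalized sum of its basic directions, that the limb rests pointing 'down' (an arm hanging at the side in the standard pose), and that the joint's local axes coincide with the body axes; it then computes the axis-angle tuple (X, Y, Z, R) that turns the rest direction onto the target. The function names are hypothetical, and STEP's own table of directions may differ.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]

# Basic directions in the H-anim standard pose:
# +Z is front, +Y is up and +X is the humanoid's left.
BASIS = {
    "front": (0.0, 0.0, 1.0), "back": (0.0, 0.0, -1.0),
    "up": (0.0, 1.0, 0.0), "down": (0.0, -1.0, 0.0),
    "left": (1.0, 0.0, 0.0), "right": (-1.0, 0.0, 0.0),
}


def norm(v: Vec) -> float:
    return math.sqrt(sum(c * c for c in v))


def normalize(v: Vec) -> Vec:
    n = norm(v)
    if n == 0.0:
        raise ValueError("directions cancel out, e.g. 'front_back'")
    return (v[0] / n, v[1] / n, v[2] / n)


def direction(name: str) -> Vec:
    """Unit vector for a name like 'front', 'front_up' or 'front-up'."""
    parts = [BASIS[p] for p in name.replace("-", "_").split("_")]
    summed = tuple(sum(axis) for axis in zip(*parts))
    return normalize(summed)


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotation_to(target: str, rest: Vec = BASIS["down"]) -> Tuple[float, float, float, float]:
    """Axis-angle (X, Y, Z, R) that turns a limb from `rest` to the named direction."""
    t = direction(target)
    cos_angle = max(-1.0, min(1.0, sum(a * b for a, b in zip(rest, t))))
    angle = math.acos(cos_angle)
    axis = cross(rest, t)
    if norm(axis) < 1e-9:
        # Same or opposite direction: pick any axis perpendicular to `rest`.
        axis = cross(rest, (1.0, 0.0, 0.0))
        if norm(axis) < 1e-9:
            axis = cross(rest, (0.0, 0.0, 1.0))
    x, y, z = normalize(axis)
    return (x, y, z, angle)
```

For 'front' the result is approximately (-1, 0, 0, 1.5708), which under VRML's right-hand rule is the same rotation as (1, 0, 0, -1.5708).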

Body Reference

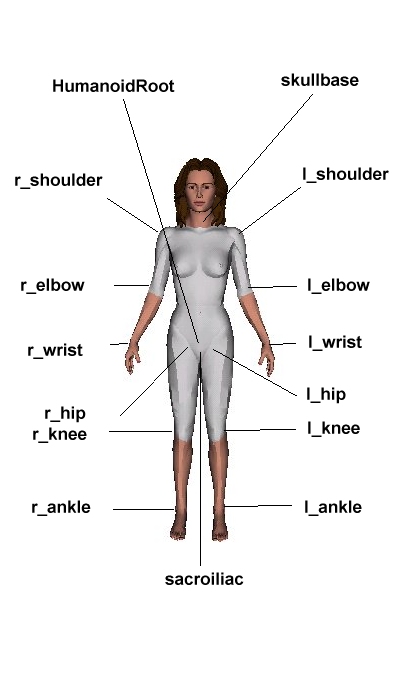

An H-anim specification contains a set of Joint nodes arranged in a hierarchy. Each Joint node can contain other Joint nodes and may also contain a Segment node, which describes the body part associated with that joint. Each Segment can also have a number of Site nodes, which define locations relative to the segment. Sites can be used for attaching accessories, such as hats, clothing and jewelry. In addition, they can be used to define eye points and viewpoint locations. Each Segment node can also have a number of Displacer nodes, which specify which vertices within the segment correspond to a particular feature or configuration of vertices.

The following figure shows several typical joints of humanoids.

Therefore, turning a body part of a humanoid amounts to setting the rotation of the relevant joint, and moving the body amounts to setting the HumanoidRoot to a new position. For instance, the action 'turning the left arm to the front slowly' is specified as:
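The joint-level reading of turn and move can be illustrated with a simplified Python model of an H-anim skeleton (not STEP syntax, and not a VRML loader). It assumes a joint stores a VRML-style axis-angle rotation and a translation; Segment, Site and Displacer nodes are omitted, and only a few joints with H-anim names, such as `HumanoidRoot`, `sacroiliac`, `vl5` and `l_shoulder`, are included. The helper names `turn` and `move` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Rotation = Tuple[float, float, float, float]  # VRML-style axis-angle
Position = Tuple[float, float, float]


@dataclass
class Joint:
    """Simplified H-anim Joint: name, rotation, translation and child joints."""
    name: str
    rotation: Rotation = (0.0, 0.0, 1.0, 0.0)  # VRML default: no rotation
    translation: Position = (0.0, 0.0, 0.0)
    children: List["Joint"] = field(default_factory=list)

    def find(self, name: str) -> Optional["Joint"]:
        """Depth-first search for a joint by name."""
        if self.name == name:
            return self
        for child in self.children:
            hit = child.find(name)
            if hit is not None:
                return hit
        return None


def turn(root: Joint, joint_name: str, rotation: Rotation) -> None:
    """Turning a body part: set the rotation of the relevant joint."""
    joint = root.find(joint_name)
    if joint is None:
        raise KeyError(joint_name)
    joint.rotation = rotation


def move(root: Joint, position: Position) -> None:
    """Moving the body: set the HumanoidRoot to a new position."""
    root.translation = position


# A small fragment of an H-anim skeleton; most joints are omitted.
skeleton = Joint("HumanoidRoot", children=[
    Joint("sacroiliac", children=[Joint("l_hip"), Joint("r_hip")]),
    Joint("vl5", children=[
        Joint("l_shoulder", children=[Joint("l_elbow")]),
        Joint("r_shoulder", children=[Joint("r_elbow")]),
    ]),
])
```

After `turn(skeleton, "l_shoulder", (-1.0, 0.0, 0.0, 1.57))` only the left shoulder's rotation changes; the elbow keeps its own identity rotation. In VRML, child joints inherit their parent's transformation, which is why setting one joint is enough to turn a whole arm.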

Time Reference

STEP uses the same time reference system as VRML. For example, the action 'turning the left arm to the front in 2 seconds' can be specified as:

This kind of explicit specification of duration in scripting actions does not satisfy the parametrization principle. Therefore, we introduce a more flexible time reference system based on the notions of beat and tempo. A beat is a time interval for body movements, whereas the tempo is the number of beats per minute. By default, the tempo is set to 60, so that a beat corresponds to one second; the tempo can, however, be changed. Moreover, we can define different speeds for body movements: for example, the speed 'fast' can be defined as one beat, whereas the speed 'slow' can be defined as three beats.

where Direction can be a natural-language-like direction, such as 'front', or a rotation value, such as 'rotation(1,0,0,3.14)', and Duration can be a speed name, such as 'fast', or an explicit time specification, such as 'time(2,second)'.
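The conversion from a Duration to seconds can be sketched in Python. Under the beat/tempo scheme above, one beat lasts 60/tempo seconds, so a speed name measured in beats scales with the tempo. The sketch assumes that an explicit time such as time(2,second) is written here as the pair `(2, "second")` and is not affected by the tempo; the `seconds` function and the unit table are hypothetical, and only 'fast' and 'slow' are taken from the text.

```python
from typing import Tuple, Union

# Speed names measured in beats, as in the text: 'fast' = 1, 'slow' = 3.
SPEEDS = {"fast": 1.0, "slow": 3.0}

# Hypothetical unit table for explicit times such as time(2, second).
UNIT_SECONDS = {"second": 1.0, "minute": 60.0}

Duration = Union[str, Tuple[float, str]]


def seconds(duration: Duration, tempo: float = 60.0) -> float:
    """Convert a STEP-style duration to seconds; tempo is in beats per minute."""
    if isinstance(duration, str):
        beats = SPEEDS[duration]
        return beats * 60.0 / tempo  # one beat lasts 60/tempo seconds
    value, unit = duration
    return value * UNIT_SECONDS[unit]  # explicit times ignore the tempo
```

`seconds("slow")` is 3.0 at the default tempo of 60, `seconds("slow", tempo=120)` is 1.5, and `seconds((2, "second"), tempo=120)` stays 2.0.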

A move action is defined as:

where Direction can be a natural-language-like direction, like 'front', a position value like 'position(1,0,10)', or an increment value like 'increment(1,0,0)'.
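How the three forms of Direction might determine the new HumanoidRoot position can be sketched in Python. Reading position(...) as an absolute target and increment(...) as an offset follows from their names; the step length of one unit for a named direction such as 'front', and the assumption that the humanoid still faces +Z, are illustrative choices, and `new_root_position` is a hypothetical helper.

```python
from typing import Tuple, Union

Vec = Tuple[float, float, float]

# Named directions for moving the whole body, one unit per move.
# Both the unit length and the fixed +Z facing are assumptions of this sketch.
MOVE_DIRECTIONS = {
    "front": (0.0, 0.0, 1.0), "back": (0.0, 0.0, -1.0),
    "left": (1.0, 0.0, 0.0), "right": (-1.0, 0.0, 0.0),
}


def new_root_position(current: Vec, direction: Union[str, Tuple[str, Vec]]) -> Vec:
    """Target HumanoidRoot position for a move action.

    `direction` is a name like 'front', ('position', (x, y, z)) for an
    absolute target, or ('increment', (dx, dy, dz)) for a relative one.
    """
    if isinstance(direction, str):
        delta = MOVE_DIRECTIONS[direction]
    else:
        kind, vec = direction
        if kind == "position":
            return vec
        if kind != "increment":
            raise ValueError(f"unknown direction kind: {kind}")
        delta = vec
    return tuple(c + d for c, d in zip(current, delta))
```

For example, starting from the origin, ('position', (1.0, 0.0, 10.0)) moves the root to (1.0, 0.0, 10.0), and a following ('increment', (1.0, 0.0, 0.0)) moves it on to (2.0, 0.0, 10.0).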

Here are typical composite operators for scripting actions:

High-level Interaction Operators

With high-level interaction operators, scripting actions can directly interact with the internal states of embodied agents or with the external states of the world. These interaction operators are based on a meta-language that is used to build embodied agents, for example, the distributed logic programming language DLP.

Here are several higher-level interaction operators: